DeepSeek - Not for Everybody

With a focus on protecting clients from reputational, financial and political harm, DeepSeek uncovers emerging threats and risks, and delivers actionable intelligence to help guide clients through difficult situations. They found this to help with expert balancing. Similar to prefilling, we periodically determine the set of redundant experts at a certain interval, based on the statistical expert load from our online service. Thanks to the effective load balancing strategy, DeepSeek-V3 keeps a good load balance throughout its full training. Although the dequantization overhead is significantly mitigated when combined with our precise FP32 accumulation strategy, the frequent data movements between Tensor Cores and CUDA cores still limit the computational efficiency. • Transporting data between RDMA buffers (registered GPU memory regions) and input/output buffers. This physical sharing mechanism further enhances our memory efficiency. Additionally, we leverage the IBGDA (NVIDIA, 2022) technology to further minimize latency and enhance communication efficiency. Delayed quantization is employed in tensor-wise quantization frameworks (NVIDIA, 2024b; Peng et al., 2023b), which maintain a history of the maximum absolute values across prior iterations to infer the current value.
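
The delayed quantization idea mentioned above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the implementation used by any of the cited frameworks: the class name `DelayedScaler`, the `history_len` parameter, and the choice of the FP8 E4M3 maximum (448) as the target range are all hypothetical choices made for this example.

```python
from collections import deque

FP8_E4M3_MAX = 448.0  # largest finite value of the FP8 E4M3 format


class DelayedScaler:
    """Hypothetical helper: infers the current scale from past max-abs values."""

    def __init__(self, history_len=4):
        # Only the most recent `history_len` max-abs values are kept.
        self.history = deque(maxlen=history_len)

    def scale(self):
        # With no history yet, fall back to a neutral scale of 1.0.
        if not self.history:
            return 1.0
        amax = max(self.history)
        return FP8_E4M3_MAX / amax if amax > 0 else 1.0

    def update(self, tensor):
        # Record this iteration's max-abs value for use in later iterations.
        self.history.append(max(abs(x) for x in tensor))
```

The scale applied in a given iteration comes from `scale()`, which looks only at earlier iterations; `update()` is then called with the current tensor so that its maximum influences later steps.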

Notably, our fine-grained quantization strategy is highly consistent with the idea of microscaling formats (Rouhani et al., 2023b), while the Tensor Cores of NVIDIA next-generation GPUs (Blackwell series) have announced support for microscaling formats with smaller quantization granularity (NVIDIA, 2024a). We hope our design can serve as a reference for future work to keep pace with the latest GPU architectures. Then, we present a Multi-Token Prediction (MTP) training objective, which we have observed to enhance the overall performance on evaluation benchmarks. MTP may also enable the model to pre-plan its representations for better prediction of future tokens. 2024), we investigate and set a Multi-Token Prediction (MTP) objective for DeepSeek-V3, which extends the prediction scope to multiple future tokens at each position. In addition, we also implement specific deployment strategies to ensure inference load balance, so DeepSeek-V3 also does not drop tokens during inference. Therefore, we recommend that future chips support fine-grained quantization by enabling Tensor Cores to receive scaling factors and implement MMA with group scaling.
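
To make "extends the prediction scope to multiple future tokens at each position" concrete, here is a minimal sketch of how the training targets could be laid out. It only shows target construction; it says nothing about the prediction modules or the loss used in DeepSeek-V3. The function name `mtp_targets` and the `depth` parameter are hypothetical names introduced for this example.

```python
def mtp_targets(tokens, depth):
    """For each position i, return the next `depth` tokens as prediction targets.

    Positions too close to the end of the sequence to have `depth`
    future tokens are skipped.
    """
    return [tokens[i + 1:i + 1 + depth] for i in range(len(tokens) - depth)]
```

With `depth=1` this reduces to ordinary next-token prediction, where every position has exactly one target.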

In order to facilitate efficient training of DeepSeek-V3, we implement meticulous engineering optimizations. In order to reduce the memory footprint during training, we employ the following techniques. In conjunction with our FP8 training framework, we further reduce the memory consumption and communication overhead by compressing cached activations and optimizer states into lower-precision formats. Besides, some low-cost operators can also utilize a higher precision with a negligible overhead to the overall training cost. While these high-precision components incur some memory overheads, their impact can be minimized through efficient sharding across multiple DP ranks in our distributed training system. To reduce the memory consumption, it is a natural choice to cache activations in FP8 format for the backward pass of the Linear operator. As a standard practice, the input distribution is aligned to the representable range of the FP8 format by scaling the maximum absolute value of the input tensor to the maximum representable value of FP8 (Narang et al., 2017). This method makes low-precision training highly sensitive to activation outliers, which can heavily degrade quantization accuracy.
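
The outlier sensitivity of per-tensor scaling can be reproduced with a small simulation. The sketch below assumes the E4M3 variant of FP8 (3 mantissa bits, minimum normal exponent -6, maximum finite value 448) and uses a simplified rounding routine with saturation and no NaN handling. `round_to_e4m3`, `quantize_per_tensor` and `dequantize` are hypothetical helper names, not functions from any library.

```python
import math

FP8_E4M3_MAX = 448.0  # largest finite E4M3 value


def round_to_e4m3(x):
    """Simplified E4M3 rounding: 3 mantissa bits, subnormals, saturation."""
    if x == 0.0:
        return 0.0
    # frexp gives |x| = m * 2**e with 0.5 <= m < 1, so floor(log2|x|) = e - 1.
    exponent = max(math.frexp(abs(x))[1] - 1, -6)  # -6: minimum normal exponent
    step = 2.0 ** (exponent - 3)                   # spacing of representable values
    q = round(abs(x) / step) * step
    return math.copysign(min(q, FP8_E4M3_MAX), x)


def quantize_per_tensor(values):
    """Scale so that max|x| maps to FP8_E4M3_MAX, then round each element."""
    amax = max(abs(v) for v in values)
    scale = FP8_E4M3_MAX / amax if amax > 0 else 1.0
    return [round_to_e4m3(v * scale) for v in values], scale


def dequantize(quantized, scale):
    """Undo the scaling; rounding error from quantization remains."""
    return [q / scale for q in quantized]


small = [0.001, 0.002, 0.003]
q_clean, s_clean = quantize_per_tensor(small)
q_outlier, s_outlier = quantize_per_tensor(small + [1000.0])
```

In this setup, `q_clean` keeps all three small values as nonzero FP8 numbers, whereas in `q_outlier` the single value 1000.0 shrinks the scale so far that 0.001 and 0.002 round to zero.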

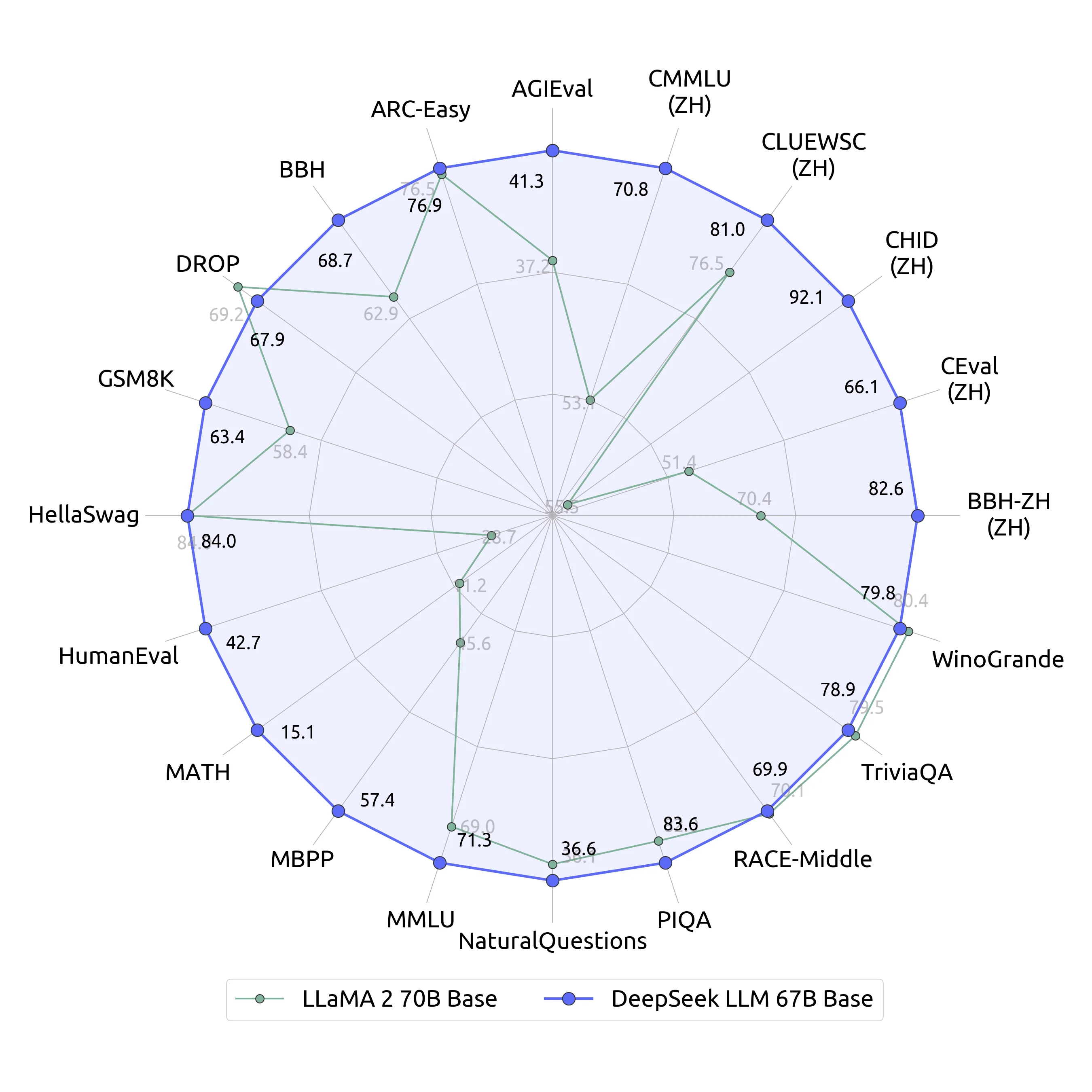

As mentioned before, our fine-grained quantization applies per-group scaling factors along the inner dimension K. These scaling factors can be efficiently multiplied on the CUDA Cores as the dequantization process with minimal additional computational cost. One key modification in our method is the introduction of per-group scaling factors along the inner dimension of GEMM operations. Based on it, we derive the scaling factor and then quantize the activation or weight online into the FP8 format. For the MoE all-to-all communication, we use the same method as in training: first transferring tokens across nodes via IB, and then forwarding among the intra-node GPUs via NVLink. Furthermore, DeepSeek-V3 achieves a groundbreaking milestone as the first open-source model to surpass 85% on the Arena-Hard benchmark. 0.001 for the first 14.3T tokens, and to 0.0 for the remaining 500B tokens. We allow all models to output a maximum of 8192 tokens for each benchmark. In the current Tensor Core implementation of the NVIDIA Hopper architecture, FP8 GEMM (General Matrix Multiply) employs fixed-point accumulation, aligning the mantissa products by right-shifting based on the maximum exponent before addition. DeepSeek-V3 is trained on a cluster equipped with 2048 NVIDIA H800 GPUs. Each node in the H800 cluster contains 8 GPUs connected by NVLink and NVSwitch within nodes.
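
Per-group scaling along the inner dimension K, with dequantization applied to each group's partial sum, can be sketched for a single dot product (one output element of a GEMM). This is a pure-Python illustration under assumptions: FP8 is simulated with a simplified E4M3 rounding routine, and `round_to_e4m3`, `quantize_groups`, `grouped_dot` and the `group_size` parameter are hypothetical names. It does not model Tensor Core accumulation precision.

```python
import math

FP8_E4M3_MAX = 448.0  # largest finite E4M3 value


def round_to_e4m3(x):
    """Simplified E4M3 rounding: 3 mantissa bits, subnormals, saturation."""
    if x == 0.0:
        return 0.0
    exponent = max(math.frexp(abs(x))[1] - 1, -6)  # floor(log2|x|), clamped
    step = 2.0 ** (exponent - 3)
    return math.copysign(min(round(abs(x) / step) * step, FP8_E4M3_MAX), x)


def quantize_groups(vector, group_size):
    """Quantize a vector along K in groups, with one scaling factor per group."""
    quantized, scales = [], []
    for start in range(0, len(vector), group_size):
        group = vector[start:start + group_size]
        amax = max(abs(v) for v in group)
        scale = FP8_E4M3_MAX / amax if amax > 0 else 1.0
        scales.append(scale)
        quantized.extend(round_to_e4m3(v * scale) for v in group)
    return quantized, scales


def grouped_dot(a, b, group_size):
    """Dot product over K; each group's partial sum is dequantized separately."""
    qa, sa = quantize_groups(a, group_size)
    qb, sb = quantize_groups(b, group_size)
    total = 0.0
    for g in range(len(sa)):
        lo, hi = g * group_size, (g + 1) * group_size
        partial = sum(x * y for x, y in zip(qa[lo:hi], qb[lo:hi]))
        total += partial / (sa[g] * sb[g])  # dequantize with both group scales
    return total


a = [0.001, 0.002, 0.003, 0.004, 1000.0, 500.0, 250.0, 125.0]
b = [1.0] * 8
exact = sum(x * y for x, y in zip(a, b))
per_group = grouped_dot(a, b, group_size=4)
per_tensor = grouped_dot(a, b, group_size=8)  # one group covers all of K
```

Setting `group_size` equal to the full length of K falls back to a single scale per vector, which makes it easy to compare both settings on the same inputs. For the vectors above, the grouped result is closer to `exact` because the large values in the second half no longer dictate the scale of the small values in the first half.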
